In the “What is Farm” section we mentioned several types of workloads, such as Message Passing Interface (MPI) and multithreading. We will now explain how those workloads relate to the hardware and how to optimize the utilization of available resources. Note that applications that run in a non-optimal way may be an order of magnitude slower. These are general recommendations, and every program has to be carefully tuned.

The most important way to utilize resources is to tune the use of a single node. The best way to do that is to prevent bottlenecks in the flow of data between the CPU and the memory. You might already know that memory is slower than the CPU; therefore, if a program issues more memory requests than computations, the computation rate is limited by the "traffic" to and from the memory. There is also "latency": the time between a request to memory and the arrival of the data. This is very similar to internet "ping" (latency) and "download" and "upload" (traffic).
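The interplay of latency and traffic can be illustrated with a rough model. The following is a minimal sketch, not a measurement of the cluster: the function names, the simple "latency plus bytes divided by bandwidth" formula, and any numbers passed in are assumptions made for illustration.

```python
def transfer_time(n_bytes, latency_s, bandwidth_bytes_per_s):
    """Estimate the time to fetch n_bytes from memory.

    Assumed model: a fixed latency followed by a transfer at constant bandwidth.
    """
    return latency_s + n_bytes / bandwidth_bytes_per_s


def is_memory_bound(flops, n_bytes, peak_flops_per_s, bandwidth_bytes_per_s):
    """Return True when moving the data takes longer than computing on it."""
    compute_time = flops / peak_flops_per_s
    memory_time = n_bytes / bandwidth_bytes_per_s
    return memory_time > compute_time
```

For small requests the latency term dominates, while for large requests the bandwidth term dominates. A code section for which `is_memory_bound` returns `True` will not run faster on a faster CPU alone.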

To discuss this, we first describe a popular way to utilize the cluster: MPI. MPI is a flexible standard that deals with "message passing" between different processes. It is mainly used when a workload can be parallelized by dividing it into many (nearly) identical tasks that are "loosely" coupled, i.e. that require infrequent synchronization by sharing relatively small amounts of data (messages). The main reason MPI is used is that parallelization is achieved by distributing tasks between MPI processes, which can be located on different nodes. Each MPI process needs at least one CPU to run, and most MPI-enabled programs can be run with varying numbers of processes. It is important to note, however, that the optimal number of MPI processes is generally unknown in advance. In other words, the workload manager is not aware of the best way to utilize the resources that you receive. As mentioned in the previous section, the minimal chunk of computational resources required for program execution contains 1 core and some memory. Even if you ask for several CPUs, the default number of MPI processes per resource chunk is 1. The user, knowing the program workflow, has to define the optimal number of MPI processes to request. As a rule of thumb, an MPI application should run processes that exchange only small messages, and it is best to set the number of MPI processes equal to the number of cores requested.

For programs with high memory usage it may be better to run fewer MPI processes per compute node than the number of available CPUs. However, all the cores still have to be requested; otherwise other users may receive them as resources for their jobs.
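As a rough illustration of this trade-off, the sketch below estimates how many MPI processes fit on one node. It assumes that the limiting factor is memory capacity and that every process needs the same amount of memory; the function and parameter names are hypothetical, and memory traffic may impose an even lower limit in practice.

```python
def mpi_processes_per_node(cores_per_node, node_memory_gb, memory_per_process_gb):
    """Largest number of MPI processes that fits both the cores and the memory of a node.

    Assumption: each process uses the same amount of memory.
    """
    if memory_per_process_gb <= 0:
        raise ValueError("memory_per_process_gb must be positive")
    # How many processes fit into the node memory.
    fit_by_memory = int(node_memory_gb // memory_per_process_gb)
    # Never run more processes than there are cores.
    return max(0, min(cores_per_node, fit_by_memory))
```

If the result is smaller than the number of cores on the node, the job should still request all the cores, as explained above, and start only that many processes.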

Advanced users can find more information by searching for NUMA (non-uniform memory access) best practices.

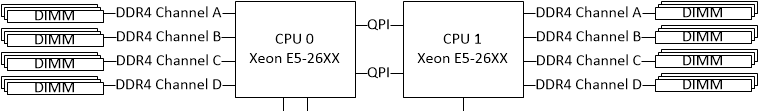

Here we address the most important optimization considerations. The figure shows the scheme of the CPU-memory connections in a dual-socket compute node:

From this scheme we learn that even though the processors are interconnected, each set of CPU cores has memory directly connected to it. Memory that is placed further away from a CPU should not be constantly used by that CPU, since the extra traffic and increased latency will greatly limit the calculation rate.

For example, running a 16-process MPI job on a 24-CPU node will most probably cause a problem with memory access.
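One possible reading of this example, offered as an assumption and not as the documented behaviour of the cluster, is that the 16 processes end up unevenly distributed over the two sockets. The sketch below compares two hypothetical placement policies for a node with 2 sockets of 12 cores each; the actual placement depends on the MPI launcher and on the workload manager.

```python
def place_packed(n_processes, sockets, cores_per_socket):
    """Fill the first socket completely before using the next one."""
    if n_processes > sockets * cores_per_socket:
        raise ValueError("more processes than cores")
    counts = [0] * sockets
    remaining = n_processes
    for s in range(sockets):
        counts[s] = min(cores_per_socket, remaining)
        remaining -= counts[s]
    return counts


def place_round_robin(n_processes, sockets, cores_per_socket):
    """Assign consecutive processes to alternating sockets."""
    if n_processes > sockets * cores_per_socket:
        raise ValueError("more processes than cores")
    counts = [0] * sockets
    for rank in range(n_processes):
        counts[rank % sockets] += 1
    return counts


print(place_packed(16, 2, 12))       # processes per socket when packed
print(place_round_robin(16, 2, 12))  # processes per socket when alternated
```

With packed placement one socket carries three times as many processes as the other, so its memory channels see much more traffic. An even split avoids this imbalance.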

Finally, if your program uses more than one CPU per MPI process through multithreading or OpenMP (or is a non-MPI program with multi-core capability), you will probably notice that it does not speed up linearly with an increasing number of cores, i.e. a program that runs for time t on 8 cores will not finish in t/2 on 16 cores. This is mainly because threads running on different physical CPUs experience different memory access latencies. The same applies to memory-hungry MPI processes: using fewer CPUs than actually requested may speed up the program. Note that the default number of threads is equal to the number of cores requested.
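A simple way to check how well a program scales is to time it with different core counts and compare the result with the ideal linear speedup. The helper functions below are a minimal sketch with hypothetical names; the timings passed to them must come from your own measurements.

```python
def speedup(t_reference, t_parallel):
    """Ratio of the reference run time to the run time on more cores."""
    return t_reference / t_parallel


def parallel_efficiency(t_reference, cores_reference, t_parallel, cores_parallel):
    """Achieved speedup divided by the ideal speedup (the ratio of core counts).

    A value of 1.0 means perfectly linear scaling; lower values mean
    that the additional cores are only partly used.
    """
    ideal = cores_parallel / cores_reference
    return speedup(t_reference, t_parallel) / ideal
```

For instance, if a run takes time t on 8 cores and 0.8 t on 16 cores, the efficiency is well below 1, which suggests that fewer cores (or fewer threads per process) may be the better choice.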

Once single-node usage is optimal, you can start experimenting with multi-node jobs. Off-the-shelf software (free, academic, or commercial) usually comes with best-practice guides for your consideration.

In addition to the resources related to parallel computing, a user can ask for specific computational resources, such as a Graphics Processing Unit (GPU), if the application can utilize them.

Specific examples for resources are given in the next section.