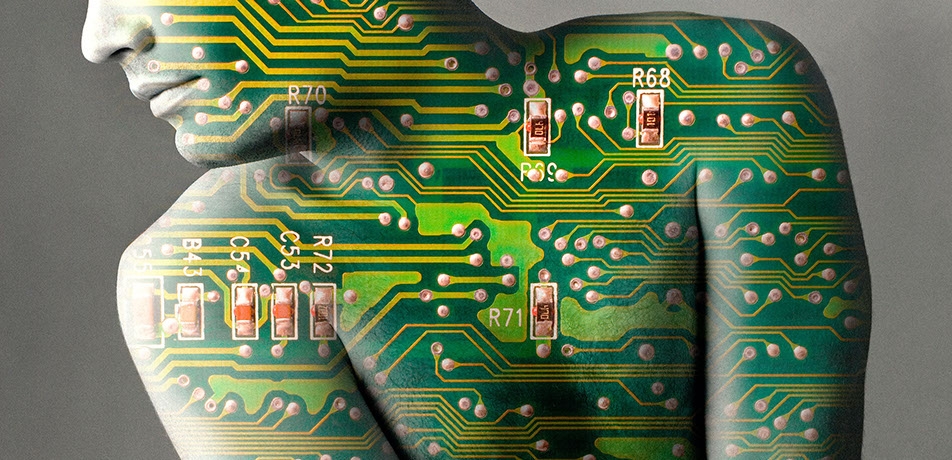

The evolving man-machine partnership

Institute scientists fuse the best of both worlds

Features


As much as we love our computers, we humans—not the systems we design—have always maintained the upper hand. At the Weizmann Institute, this “power relationship” is now being re-examined.

A number of labs are recalibrating the man-machine connection by demonstrating how computerized systems can form beneficial partnerships with human capabilities related to critical thinking, sight, and even the way we breathe.

As new technologies emerge, the relationship between man and machine grows closer, offering ways to bring automation into a vast range of human activities. At the same time, these technologies raise fascinating questions about how the devices that make our lives faster, more accurate, and more powerful affect society. By pioneering the principles and methodologies that propel the emerging man-machine partnership, Weizmann Institute researchers are contributing design principles for the discoveries yet to come.

Wisdom to work together

“Since the beginning of the computer age, programming has been based on telling a machine exactly what to do, so that the machine can serve us,” says Prof. David Harel, a member of the Department of Computer Science and Applied Mathematics. “But we believe that the time has come to shift the balance between man and machine.”

Prof. Harel created two breakthrough visual languages for systems design, “Statecharts” (1984) and “Live Sequence Charts” (LSC, 1998), which are credited with “liberating” programmers and engineers by dramatically simplifying the translation of abstract ideas into formal computer code. Now, together with Dr. Assaf Marron and other members of his research team, he has set his sights on what he believes will be systems design’s next revolution. He calls the approach “Wise Computing”: the computer actually joins the development team as an equal partner, one that is knowledgeable, concerned, and proactively responsible.

Wise Computing involves an intelligent software engineering environment that provides what professional systems developers have come to expect: powerful tools for programming and analysis. In addition, however, it imbues the development suite with knowledge of engineering principles, as well as comprehensive information about the specific real-world domain in which the system will eventually be used. This gives the computer “experience” to draw on, enabling it to actively contribute to the design process, something that was previously the exclusive purview of human programmers and domain experts.

“Based on this approach,” Prof. Harel says, “we enable the computer to participate in an ongoing dialogue. Not only does the computer monitor the human programmer’s decisions in real time, it actually takes part in the decision-making process, proactively guiding the programmer towards improved outcomes.”

The new approach also mimics and applies powerful capabilities that humans possess, but which are not specific to systems development. “In Wise Computing, the design suite spontaneously notices unexpected patterns in the system’s behavior, and categorizes them as desired or undesired,” Dr. Marron explains. “It can then propose tests and solutions for detected problems. This unprecedented functionality is possible because at least some of the knowledge, skills, and experience of human experts has been incorporated into the design tool itself.”

Prof. Shimon Ullman

Wise Computing can also reduce errors. “Rather than composing a million lines of code and then attempting after-the-fact verification to check how they work together, Wise Computing will help designers get the overall design right far earlier,” Prof. Harel says.

Another advantage of the approach is its sophisticated use of natural language. Together with his former PhD student Dr. Michal Gordon, Prof. Harel made it possible, for the first time, to write fully executable LSC programs in reasonably rich English. According to Prof. Harel, this represents an important step toward getting computers to understand the way humans naturally communicate, rather than requiring instructions written in a formal programming language.

“The computer translates the results of this ‘conversation’ into code automatically,” Prof. Harel says. “Our demo already shows how humans and computers can share responsibility for the creation of a computerized system. But the conversation has really just begun.”

Seeing over the horizon

Computerized vision (think security sensors, or the way digital cameras automatically outline faces) is also creeping closer to human capability. Israel Prize winner Prof. Shimon Ullman of the Institute’s Department of Computer Science and Applied Mathematics recently showed how analyzing the neural processes by which the human brain handles visual stimuli may eventually contribute to more powerful computer vision systems.

The Ullman lab has spawned scientists who have gone on to develop new technologies based on the understanding of sight. For instance, Prof. Ullman’s former student Prof. Amnon Shashua of the Hebrew University founded Mobileye, the automotive computer-vision startup that went on to become the most successful IPO in Israeli history.

The current story begins with Amazon Mechanical Turk, a crowdsourcing platform that scientists and technology developers use to test their ideas over the Internet by paying each member of a large pool of participants a small fee for completing web-based tasks. Prof. Ullman used Mechanical Turk to ask thousands of people to identify a series of images, and compared their performance with that of computers programmed to do the same.

Some of these images were successively cropped from larger ones, revealing less and less of the original. Others were successively reduced in resolution, losing detail at each step. Prof. Ullman’s team found that humans performed better than computers at identifying partial or low-resolution images. They also noticed something else: when the level of detail was reduced to a very specific level, nearly all the human participants lost their ability to identify the image.

According to Prof. Ullman, this sharp dividing line between recognition and non-recognition indicates a visual recognition threshold that is structurally hard-wired into the human brain. He says that while current computer-based models seek to achieve human-like vision, they do not yet incorporate anything that approximates the human “cut-off point” he discovered. In short, he says, there is more work to be done before computers can truly mimic human sight.
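To make the idea of a “cut-off point” concrete, here is a minimal Python sketch, assuming we already have the fraction of participants who recognized an image at each successive level of cropping or resolution reduction. It simply picks the level just before the steepest single drop in recognition. The function name and input format are hypothetical, any numbers passed to it would be invented rather than taken from the study, and the largest-single-step rule is only one simple way to locate such a transition, not the method Prof. Ullman’s team used.

```python
from typing import Sequence


def find_cutoff(recognition_rates: Sequence[float]) -> int:
    """Return the last reduction level before the steepest drop in recognition.

    recognition_rates[i] is the (hypothetical) fraction of participants who
    recognized the image at reduction level i, where level 0 is the least
    cropped or least blurred version.
    """
    if len(recognition_rates) < 2:
        raise ValueError("need at least two reduction levels")
    # Drop in recognition between each level and the next, more reduced one.
    drops = [a - b for a, b in zip(recognition_rates, recognition_rates[1:])]
    # Index of the largest drop = last level most participants still recognize.
    return max(range(len(drops)), key=lambda i: drops[i])
```

A sharp transition of the kind described above would show up as one drop that dwarfs all the others; a gradual decline would spread the loss over many small steps, and the returned index would then say little.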

“The results of this study indicate that no matter what our life experience or training, object recognition works nearly the same way for everyone,” Prof. Ullman says. “The findings also suggest there may be something in our brains that is tuned to optimally process images with a minimal amount of visual information, in a way that current computer models cannot replicate. Such ‘atoms of recognition’ could prove valuable tools for brain research, as well as for developing improved computer and robotic vision systems.”

And speaking of better computer vision, Prof. Ullman’s research also hints at a potential engineering strategy.

“The difference between computer and human visual recognition capabilities may lie in the fact that computer algorithms adopt a ‘bottom-up’ approach that moves from simple features to complex ones. Human brains, on the other hand, work in ‘bottom-up’ and ‘top-down’ modes simultaneously, by comparing the elements in an image to a sort of model stored in memory,” Prof. Ullman explains. “Perhaps, by incorporating this bi-directional analysis into automated systems, it may be possible to narrow the performance gap between humans and computers, and make computer-based visual recognition better.”

Sniff and go

In another Weizmann Institute lab, neurobiological studies of yet another human sense, smell, inspired the creation of a new technology for people who need mobility assistance: a sniff-controlled motorized wheelchair. This patent-pending invention emerged serendipitously from the laboratory of Prof. Noam Sobel, an expert in the way the brain processes olfactory signals.

“We had been examining sensitivity to particular smells, using an experimental setup in which the sniffing action in participants’ noses caused a device to emit an odor,” says Prof. Sobel, former head of the Weizmann Institute’s Department of Neurobiology. “It suddenly dawned on us that this nasal ‘trigger’ could be used as a fast and accurate switch for controlling other devices—and that it might be particularly useful for people with physical disabilities.”

In 2010, he put his hunch into action by applying “nose power” to the navigation of motorized wheelchairs. He created a two-sniff code in which two inward sniffs propelled the chair forward and two outward sniffs reversed it, while different combinations of in-and-out sniffing sent the chair to the right or left. After just 15 minutes of training, a man paralyzed from the neck down successfully drove his wheelchair around an obstacle course, an achievement that made headlines worldwide.
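The two-sniff code is essentially a tiny lookup table. The Python sketch that follows is illustrative only and is not the lab’s implementation: it assumes sniffs have already been detected and labeled as inward or outward, and because the article does not say which mixed pair means right and which means left, that assignment (like every name in the code) is hypothetical.

```python
from enum import Enum


class Sniff(Enum):
    IN = "in"
    OUT = "out"


class Command(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    RIGHT = "right"
    LEFT = "left"


# Forward/reverse follow the article; the left/right assignment of the
# mixed pairs is an assumption made purely for illustration.
SNIFF_CODE = {
    (Sniff.IN, Sniff.IN): Command.FORWARD,
    (Sniff.OUT, Sniff.OUT): Command.REVERSE,
    (Sniff.IN, Sniff.OUT): Command.RIGHT,  # assumed
    (Sniff.OUT, Sniff.IN): Command.LEFT,   # assumed
}


def decode(sniffs: list[Sniff]) -> list[Command]:
    """Translate a stream of labeled sniffs into commands, two sniffs at a time.

    An unpaired trailing sniff is ignored.
    """
    commands = []
    for i in range(0, len(sniffs) - 1, 2):
        commands.append(SNIFF_CODE[(sniffs[i], sniffs[i + 1])])
    return commands


if __name__ == "__main__":
    stream = [Sniff.IN, Sniff.IN, Sniff.OUT, Sniff.IN]
    print([c.value for c in decode(stream)])
```

Reading sniffs in fixed pairs keeps the decoder stateless. A real controller would presumably also need timing windows between sniffs and safeguards against accidental ones, which this sketch leaves out.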

More recently, Prof. Sobel’s team reported that human “sniff control” can be combined with computational technologies to solve another problem associated with physical paralysis: the inability of individuals with paralysis to muster the coordinated muscle power needed to produce an effective cough.

“People with high spinal cord injuries typically depend on caregivers to manually assist in coughing by pressing against their abdominal wall,” says Dr. Lior Haviv, a staff scientist in Prof. Sobel’s lab. “While some technologies exist for stimulating abdominal muscles with surface electrodes, the success of these methods depends on very precise timing. With the use of the sniff controller, our lab significantly improved the ability of people with spinal cord injuries to cough effectively, and without assistance.”

Dr. Haviv, who himself uses a wheelchair as the result of a bicycle accident 12 years ago, explains that the “key to the cough” was the exact measurement of nasal airflow. The researchers measured the peak flow of air expelled during coughing in subjects with a high spinal cord injury whose cough was externally stimulated. “While all the assisted techniques we studied improved airflow to a similar degree,” he says, “the sniff-control method was the only one that could be activated even by those individuals with severe spinal cord injury. This provides independence that is a critical factor for quality of life.”

Prof. David Harel is funded by The Benoziyo Fund for the Advancement of Science, Braginsky Center for the Interface between Science and Humanities, The Willner Family Leadership Institute for the Weizmann Institute of Science. Prof. Harel is the incumbent of the William Sussman Professorial Chair of Mathematics.

http://www.wisdom.weizmann.ac.il/~dharel

Prof. Noam Sobel is funded by Adelis Foundation, The Norman and Helen Asher Center for Brain Imaging, which he heads, The Azrieli National Institute for Human Brain Imaging and Research, which he heads, The late H. Thomas Beck, The Nella and Leon Benoziyo Center for Neurosciences, which he heads, The Carl and Micaela Einhorn-Dominic Institute for Brain Research, which he heads, Nadia Jaglom Laboratory for the Research in the Neurobiology of Olfaction, Lulu P. & David J. Levidow Fund for Alzheimer's Disease and Neuroscience Research, James S. McDonnell Foundation 21st Century Science Scholar in Understanding Human Cognition Program, Rob and Cheryl McEwen Fund for Brain Research. Prof. Sobel is the incumbent of the Sara and Michael Sela Professorial Chair of Neurobiology.

http://www.weizmann.ac.il/neurobiology/worg

Prof. David Harel

Dr. Anton Plotkin in the lab of Prof. Noam Sobel, testing the sniff-controlled motorized wheelchair.