-

Movers project

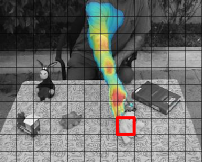

Movers projectThe goal of this project is to explore the learning mechanisms, that underly the rapid developement of visual concepts in humans, during early infanthood.

Specifically, we suggest a model for learning to recognize hands and direction of gaze from unlabeled natural video streams.

This is a joint work with N. Dorfman. -

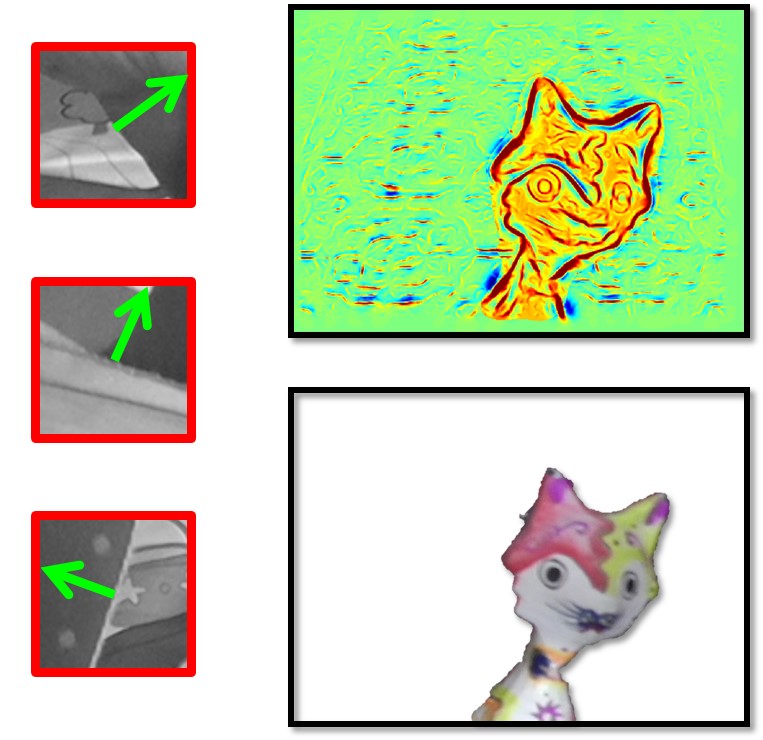

Object Segregation project

Object Segregation projectObject segregation is a complex perceptual process that relies on the integration of multiple cues. This is a computationally challenging task in which humans outperform the best performing models. However, infants ability to perform object segregation in static images is severely limited. In this project we are interested in questions such as: How the rich set of useful cues is learned? What initial capacities make this learning possible? Our model initially incorporates only two basic capacities known to exist at an early age: the grouping of image regions by common motion and the detection of motion discontinuities. The model then learns significant aspects of object segregation in static images in an entirely unsupervised manner by observing videos of objects in motion.

-

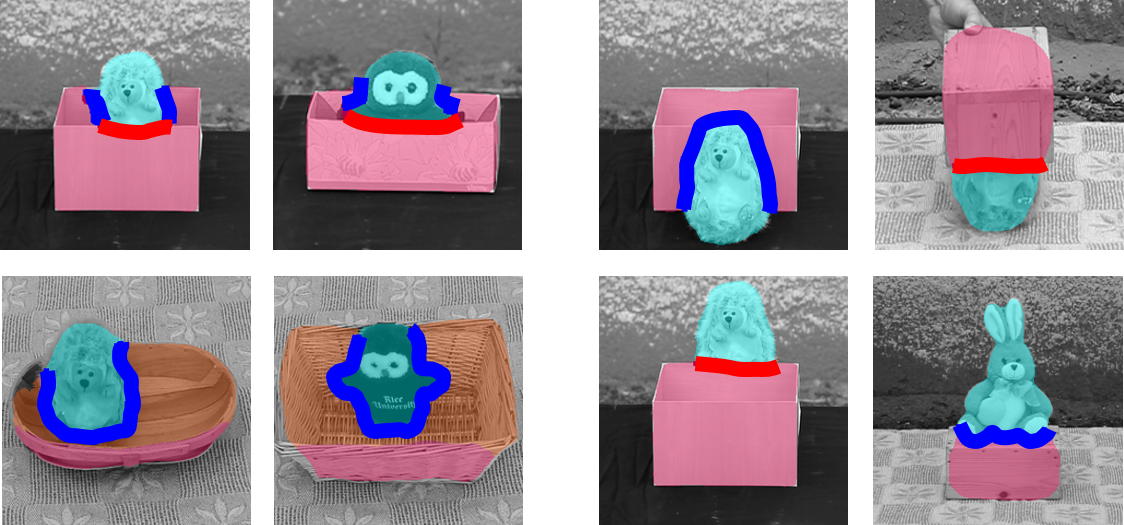

Containment project

Containment projectHuman visual perception develops rapidly during the first months of life, yet, little is known about the underlying learning process, and some early perceptual capacities are rather surprising.

One of these is the ability to categorize spatial relations between objects, and in particular to recognize containment relations, where one object is inserted into another. This lies in sharp contrast to the computational difficulty of the task.

This study suggests a computational model that learns to recognize containment and other spatial relations, using only elementary perceptual capacities. Our model shows that motion perception leads naturally to concepts of objects and spatial relations, and that further development of object representations leads to even more robust spatial interpretation. We demonstrate the applicability of the model by successfully learning to recognize spatial relations from unlabeled videos.

Computer Vision and Visual Intelligence