Integrating information from different sensory modalities is essential for a faithful internal representation of objects. Different senses represent different aspects of the surrounding world. They operate at different time scales and integrate information presented at varying distances from the body. For example, tactile sensation lets us sense only very proximal objects, whereas the auditory system also lets us sense very distant ones. We often sense an object with two or more modalities simultaneously, which improves accuracy and speed across tasks. For example, visually impaired people who use a white cane are trained to identify the texture of different surfaces using both the somatosensory and auditory cues that arise from the interaction between the cane and the surface.

Although the mechanisms of multimodal integration have been studied in rodents, experimenters have usually presented unrelated stimuli from two or more modalities and asked how the response to one modality is modulated by stimulation of the other.

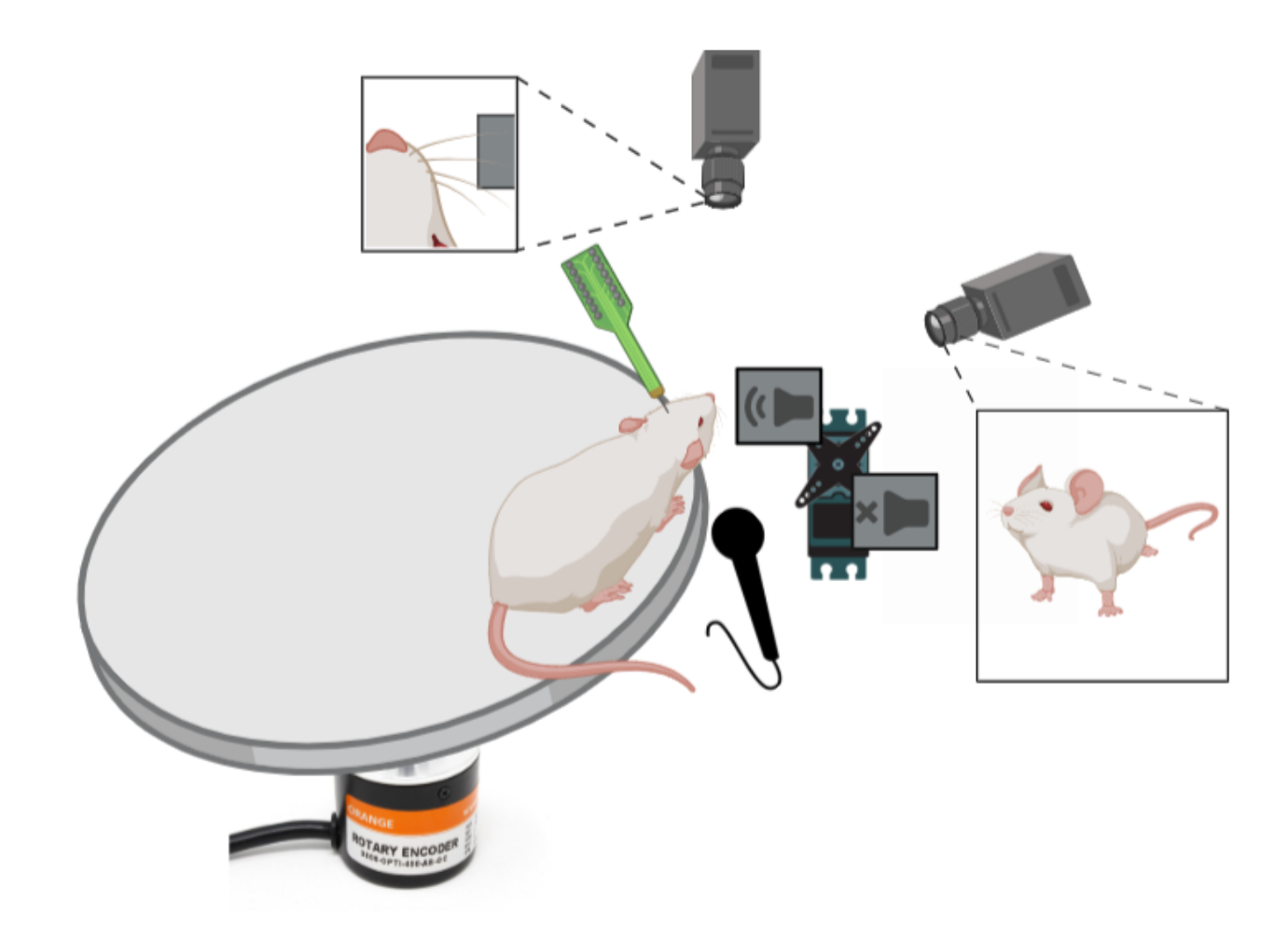

Our method uses a self-generated multimodal stimulus, which allows us to investigate multimodal integration in mice through natural whisking behavior rather than artificial stimulation sources.

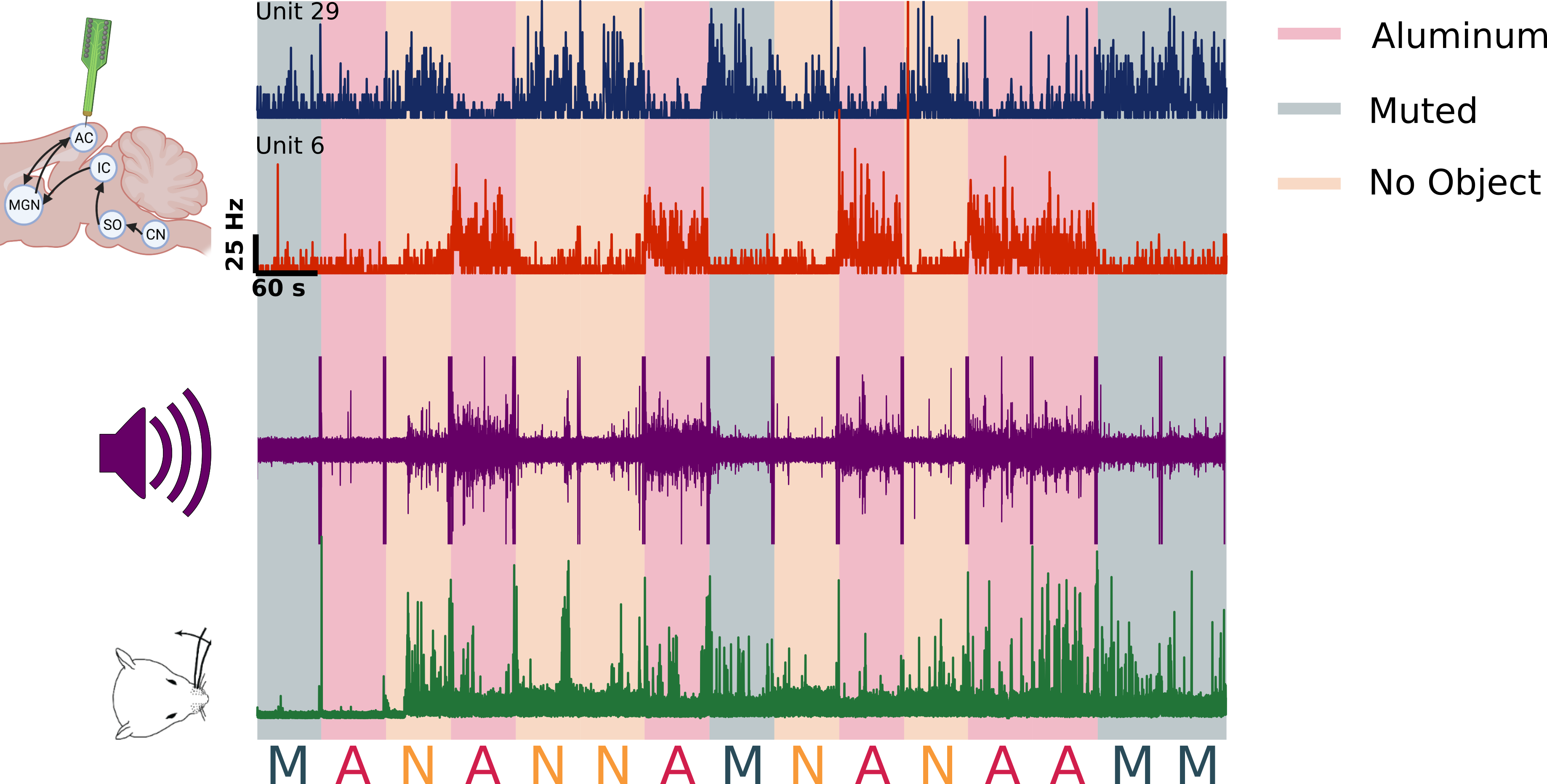

We simultaneously record extracellular activity from hundreds of neurons in multiple cortical sensory areas using the Neuropixels system. We combine these recordings from hundreds of cells with novel methods to quantify behavior and movement.

Combining cutting-edge extracellular recording methods, behavioral quantification, and our newly developed experimental design will allow us to investigate multimodal integration in the cortex thoroughly.