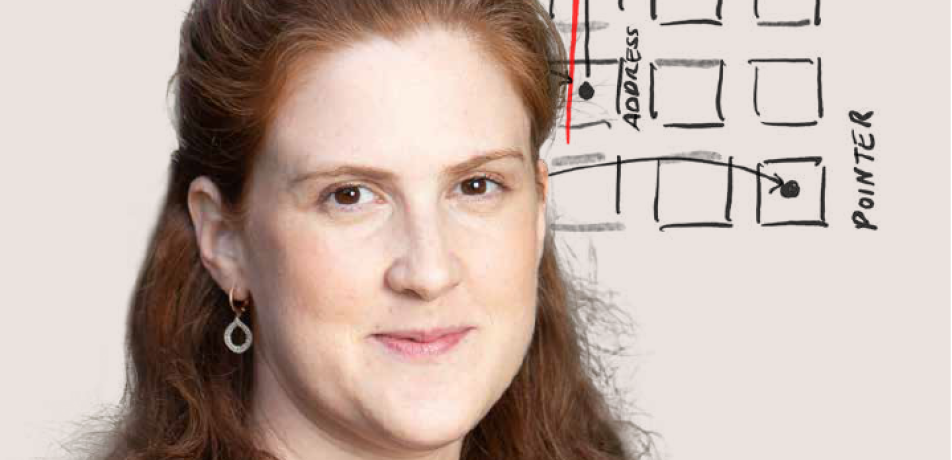

Getting into the "mind" of the machine

Dr. Tamar Rott Shaham is demystifying AI neural networks

New scientists

Is it possible to get an artificial intelligence system to reveal its most closely held secrets? Dr. Tamar Rott Shaham, a recent recruit to the Weizmann Institute’s Department of Computer Science and Applied Mathematics, believes the answer lies in treating AI systems not as inscrutable black boxes, but as scientific objects that can be studied, tested, and probed. Her research develops new methods for systematically investigating how modern neural networks operate—and how their behavior can be improved.

“Everyone is talking about the coming AI revolution, but the process is already well underway,” says Dr. Rott Shaham, who is set to arrive on campus in the fall of 2026 after she completes her postdoctoral fellowship at the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology.

As a postdoc, she developed methods to understand, control, and enhance AI models, with a particular focus on neural networks, the machine learning models used in many of today’s most capable AI systems.

Operating in the background of many essential technologies, neural networks are computational tools capable of learning complex patterns and rules from data and using these to guide their behavior on new, unseen inputs. As Dr. Rott Shaham explains, these networks go far beyond determining what movies Netflix will suggest for Saturday night.

“AI models increasingly play a role in decisions that affect everything from healthcare to finance to national security, and in some cases solve problems better and faster than humans can,” she says. “Yet despite their success, we still don’t fully understand how these models represent information internally, or how that information is used when they encounter new situations. Because of these gaps in our knowledge, we are limited in our ability to enhance the performance of neural networks or prevent undesired outcomes. This reduces trust and can even create risk.”

AI you can rely on

To address this problem, Dr. Rott Shaham and her MIT colleagues created an AI model capable of interrogating and demystifying how neural networks function. What’s unique about their approach is that, rather than chronicling the chain of events that leads a neural network to a specific individual decision, the model reveals the fundamental structures, logic, and syntax that allow such computational behaviors to emerge in the first place.

“Our model mimics investigations done by human scientists,” Dr. Rott Shaham explains. “It generates hypotheses about how a neural network might be functioning, then tests the hypotheses by initiating experiments and tracking network internal operations and outputs in real time. Ultimately, this approach might contribute to technologies capable of diagnosing neural networks’ potential failures, hidden biases, or unexpected behaviors, even before a model is deployed. This would represent a significant step forward in AI research by making AI systems more understandable and reliable.”

“The goal isn’t to claim complete understanding,” she emphasizes. “It’s to make the process of studying these systems more systematic, rigorous, and scalable. That’s an important step toward building AI systems we can rely on.”

“By opening the black box of neural networks, it becomes possible for computer scientists to ‘borrow’ mechanisms that enable specific functions,” she says. “This expands the toolbox available to human designers.”

A question-driven kid

Dr. Rott Shaham was raised in a small town in northern Israel, where her family life gave her early exposure to both science and technology.

“My mother, a PhD, worked in biological research, and my dad was a mechanical engineer,” she says, adding that she was always encouraged to ask how things worked, how they might be taken apart, and how they could be put back together. “I was a question-driven kid. This is something that still accompanies me in my work as a research scientist.”

In recent years, the Weizmann Institute has launched several programs designed to promote the integration of AI into scientific research of all kinds, and Dr. Rott Shaham couldn’t be happier to be part of the process.

“Weizmann is a collaborative environment that actively encourages cross-disciplinary research,” she says. “I’m particularly interested in applying these tools beyond AI. For instance, researchers who study biological brains and those who study artificial neural networks often confront similar challenges: both seek to understand complex systems that learn from data. There is significant potential in bringing these perspectives together.”

EDUCATION AND SELECT AWARDS

• BSc (2015) and PhD (2022), Technion – Israel Institute of Technology

• Postdoctoral Fellow, Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology (2022–2025)

• Women Techmakers Scholars Program by Google (2019); Best Paper Award (Marr Prize) at the International Conference on Computer Vision (2020); Adobe Research Fellowship (2020); Rothschild Fellowship from Yad Hanadiv (2021); Eric and Wendy Schmidt Postdoctoral Award for Women in Mathematical and Computing Sciences (2022); Zuckerman STEM Leadership Program (2022)