Research

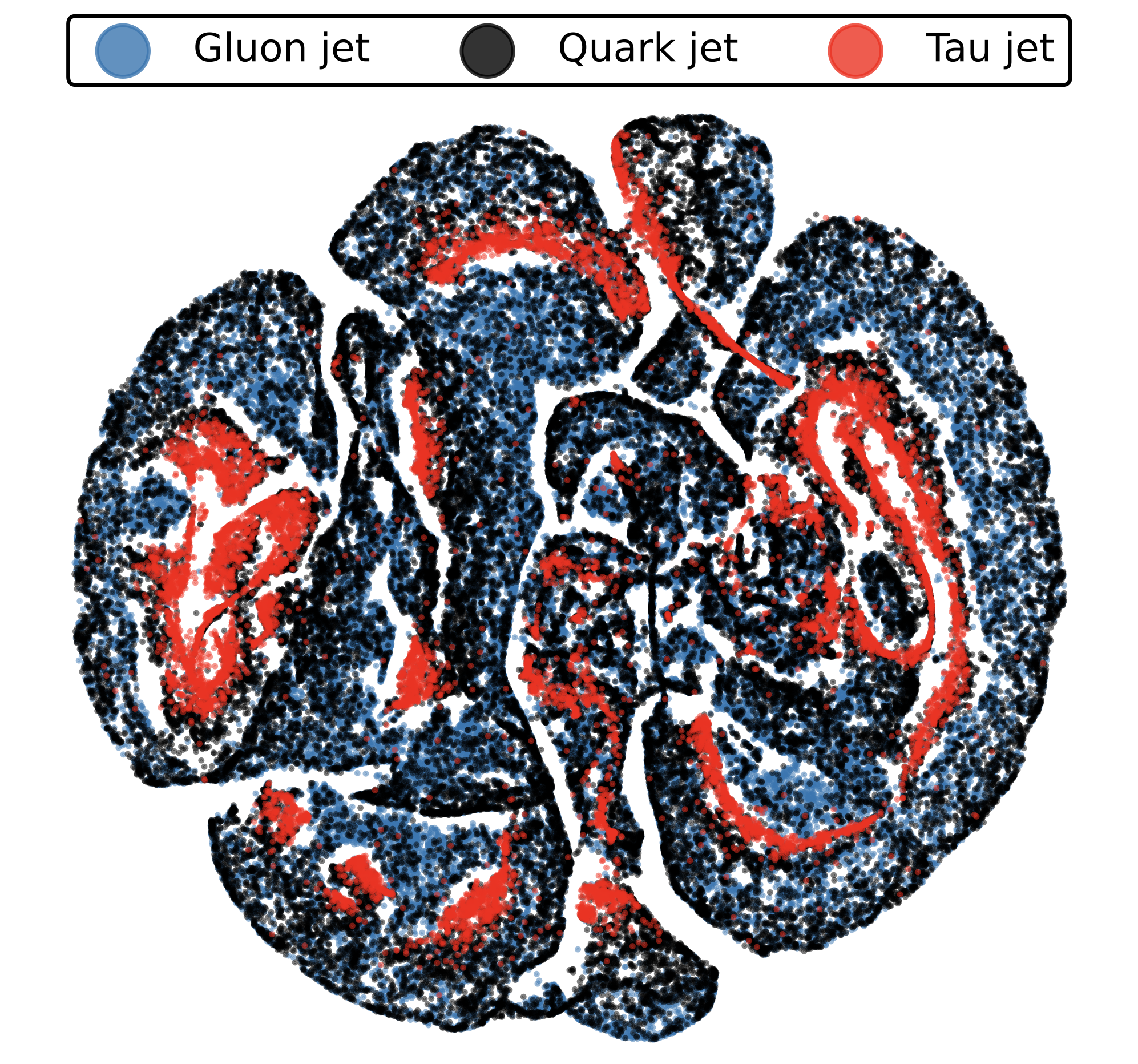

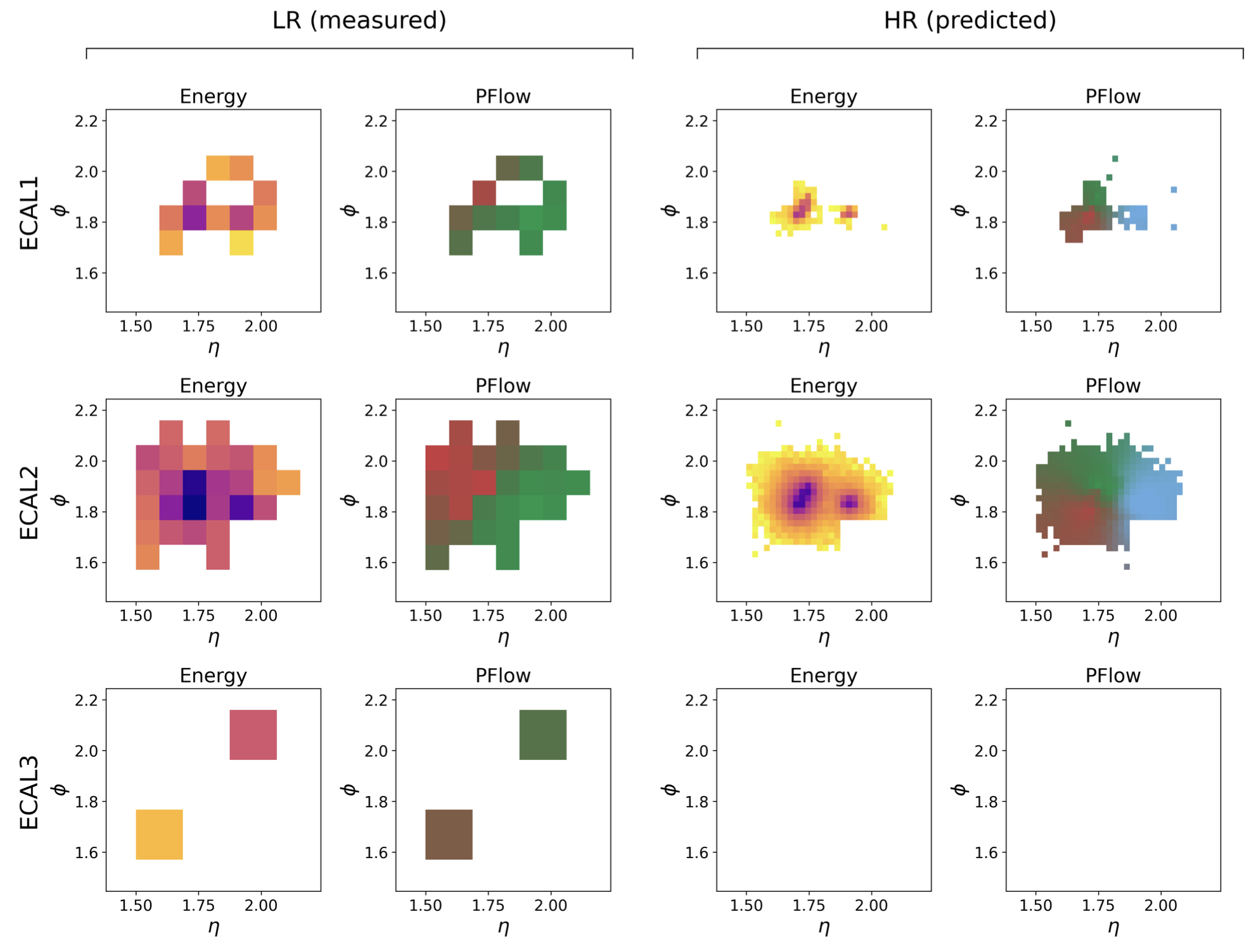

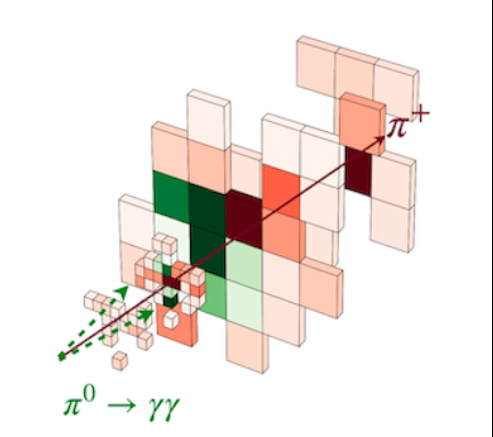

Our group applies advanced machine learning techniques to physics problems. Examples include deep neural networks for heavy-flavour tagging, graph-based methods for vertex detection, U-Net for particle flow, and autoencoders for detector monitoring. We are also involved in ATLAS Higgs physics analyses, in particular VHcc and Hc, both of which relate to the Higgs-to-charm coupling.

Research page