Prosody, the music of speech (pitch, duration, loudness and timbre), carries the non-verbal part of language: emotion, attitude, emphasis, conversational actions, power relations and more. Compare, for example, “I’m a DOCTOR” (someone insisting on their title) with “I’M a doctor” (me and not someone else). Although the words are identical, the message is not.

Prosody is therefore a crucial source of information, both for understanding a speaker’s intentions and for interpreting the communicative context. Yet it remains largely unexploited in speech technology, owing both to gaps in theory and to difficulties in signal processing. The Prosody Lab at WIS is developing methods that take on both challenges: we provide tools that help speech- and language-dependent applications, such as dialogue systems, call centers and automatic translation, read human situations.

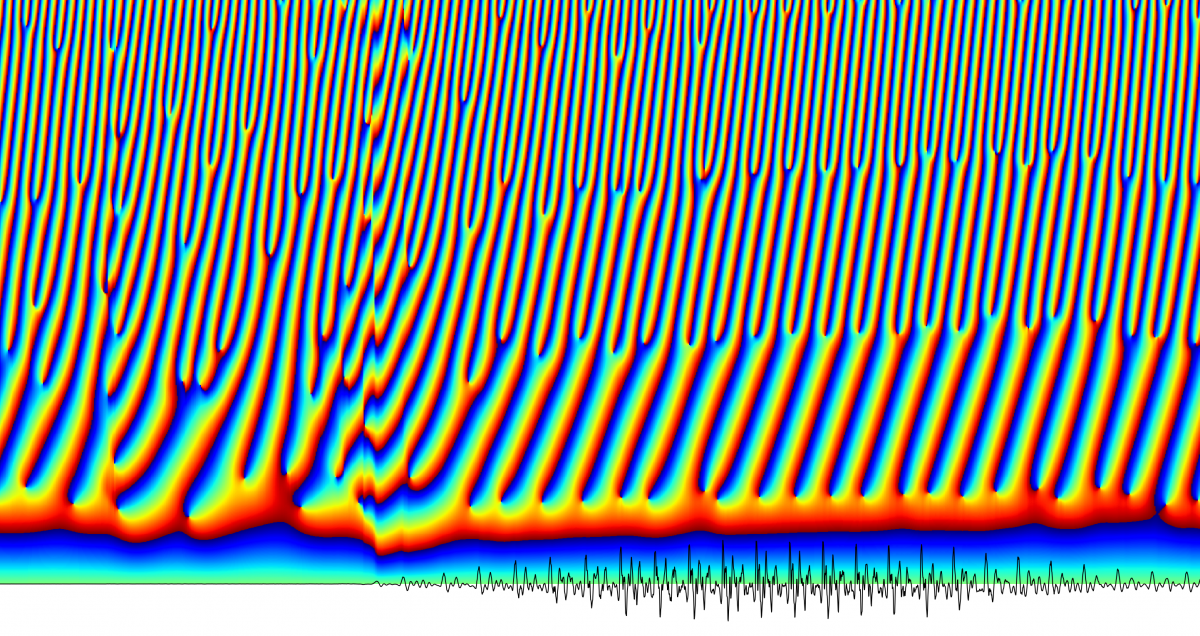

Our approach is based on detecting naturally produced prosodic units. Our first algorithm detects the boundaries of these units of meaning. It is surprisingly easy to implement on top of the output of automatic speech recognition (ASR) systems, and can be used to improve their accuracy.
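To illustrate what running a boundary detector on top of ASR output can look like, here is a minimal Python sketch. It is not the published algorithm: it swaps in a simple heuristic that places a boundary after a word when the following pause is long, or when a shorter pause coincides with a pitch reset. It assumes the recognizer supplies word-level start and end times (many ASR systems can) plus a per-word mean pitch estimate; the `Word` class, the function names and all thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Word:
    """One recognized word with ASR timestamps (seconds) and an optional mean pitch (Hz)."""
    text: str
    start: float
    end: float
    f0: Optional[float] = None


def detect_boundaries(
    words: List[Word],
    pause_threshold: float = 0.3,  # hypothetical: a pause this long always ends a unit
    short_pause: float = 0.1,      # hypothetical: minimum pause for a pitch-reset boundary
    reset_ratio: float = 1.25,     # hypothetical: next word's pitch / current word's pitch
) -> List[int]:
    """Return indices i such that a prosodic boundary is placed after words[i]."""
    boundaries = []
    for i in range(len(words) - 1):
        cur, nxt = words[i], words[i + 1]
        pause = nxt.start - cur.end
        has_pitch = cur.f0 is not None and nxt.f0 is not None and cur.f0 > 0
        if pause >= pause_threshold:
            boundaries.append(i)  # long silence: boundary regardless of pitch
        elif pause >= short_pause and has_pitch and nxt.f0 / cur.f0 >= reset_ratio:
            boundaries.append(i)  # short silence plus a pitch reset
    return boundaries


def segment(words: List[Word], boundaries: List[int]) -> List[str]:
    """Split the word sequence into prosodic units at the detected boundaries."""
    units, start = [], 0
    for b in boundaries:
        units.append(" ".join(w.text for w in words[start:b + 1]))
        start = b + 1
    if start < len(words):
        units.append(" ".join(w.text for w in words[start:]))
    return units


words = [
    Word("so", 0.00, 0.20, 180.0),
    Word("I", 0.25, 0.35, 170.0),
    Word("think", 0.35, 0.60, 150.0),
    Word("yes", 1.10, 1.40, 200.0),
    Word("right", 1.55, 1.80, 260.0),
]
print(segment(words, detect_boundaries(words)))
```

On this toy input the half-second silence after “think” and the pitch reset on “right” split the utterance into the units “so I think”, “yes” and “right”. Because the detector only needs timestamps and a pitch track, it can sit after an existing recognizer without modifying it.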

Our latest achievement is an unsupervised classification of prosodic units. With no human labelling, and based on prosody alone, the classifier groups together hesitations, questions, sarcastic questions, chunks of high speaker involvement and so on. The result is a “concordance” of prosodic patterns. We are also exploring higher-level prosodic structures, that is, sequences of unit types, which form our prosodic syntax.
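A toy version of the unsupervised step can be sketched in Python. The assumptions here are ours, not the lab’s: each unit is summarized by three hypothetical features (duration in seconds, mean pitch in Hz and pitch slope in Hz/s), the features are z-scored, and plain k-means stands in for whatever clustering the classifier actually uses. Counting transitions between the resulting cluster labels then gives a first, crude view of sequences of unit types.

```python
import math
from collections import Counter
from typing import List, Sequence

Vector = List[float]


def standardize(rows: Sequence[Sequence[float]]) -> List[Vector]:
    """Z-score each feature column so that Hz and seconds become comparable."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[d] for r in rows) / n for d in range(dims)]
    stds = []
    for d in range(dims):
        var = sum((r[d] - means[d]) ** 2 for r in rows) / n
        stds.append(math.sqrt(var) or 1.0)  # constant feature: avoid dividing by zero
    return [[(r[d] - means[d]) / stds[d] for d in range(dims)] for r in rows]


def dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iters: int = 100) -> List[int]:
    """Plain k-means with deterministic farthest-point initialisation."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next centroid: the point farthest from all centroids chosen so far.
        far = max(points, key=lambda p: min(dist2(p, c) for c in centroids))
        centroids.append(list(far))
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each unit goes to its nearest centroid.
        new_labels = [min(range(k), key=lambda j: dist2(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # converged: no unit changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def transition_counts(labels: Sequence[int]) -> Counter:
    """Count how often unit type a is immediately followed by unit type b."""
    return Counter(zip(labels, labels[1:]))


# Hypothetical features per unit: (duration_s, mean_f0_hz, f0_slope_hz_per_s)
units = [
    (0.40, 210.0, 120.0),  # short, high, rising
    (1.50, 140.0, -5.0),   # long, low, flat
    (0.50, 220.0, 110.0),
    (1.40, 135.0, -8.0),
    (0.45, 215.0, 130.0),
]
labels = kmeans(standardize(units), k=2)
print(labels)
print(transition_counts(labels))
```

Here the short rising units fall into one cluster and the long flat ones into the other, giving the labels `[0, 1, 0, 1, 0]`, and the two transitions (0 to 1 and 1 to 0) each occur twice. Real prosodic units would need richer features and a principled choice of the number of clusters; the sketch only shows the shape of the pipeline, from features to unit types to sequences.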

Integrating prosody into speech recognition systems could transform natural language processing. Imagine an app that announces non-verbal intentions in a conversation (e.g., “the other person is uneasy and awaiting your response on X”), an automatic translator that chooses the correct syntax according to your intonation, or even a Siri that displays soothing flowers when you sound upset.

Automatic detection of prosodic boundaries in spontaneous speech

Biron T., Baum D., Freche D., Matalon N., Ehrmann N., Weinreb E., Biron D. & Moses E. (2021) PLoS ONE. 16, 5, e0250969.
